The question of how an absolute pressure measurement differs from a gauge (relative) pressure measurement comes up again and again.

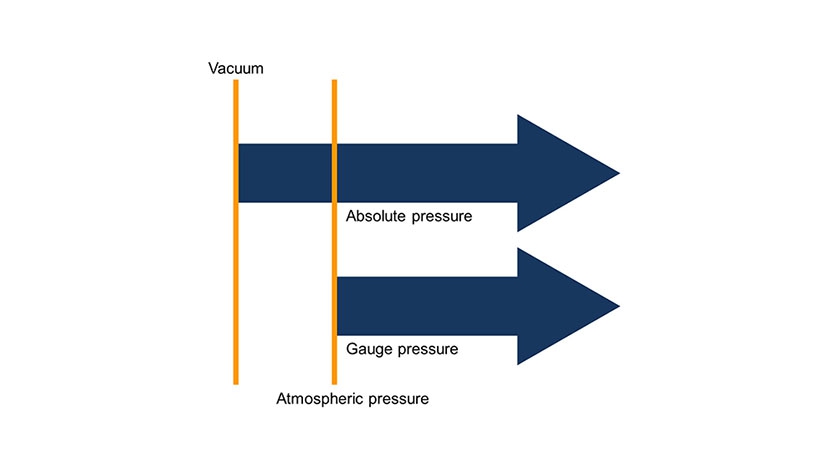

From a purely theoretical point of view, the answer is simple: a gauge pressure measurement (also called an overpressure measurement) always measures the difference from the current ambient (atmospheric) pressure. This ambient pressure, however, changes with the weather and with the altitude above sea level.

An absolute pressure measurement, on the other hand, measures the difference from an ideal (absolute) vacuum. Because this reference does not change, the measurement is independent of weather and altitude.
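The relationship between the two references can be written down in a few lines. The following is a minimal sketch, not a sensor API: the function names and the default ambient value (the standard atmosphere of 1013.25 mbar) are assumptions made for illustration, and the real ambient pressure at a given site will differ.

```python
# Assumption: standard sea-level atmosphere in mbar. The real ambient
# pressure varies with weather and altitude, as described above.
STANDARD_ATMOSPHERE_MBAR = 1013.25


def gauge_to_absolute(gauge_mbar: float,
                      ambient_mbar: float = STANDARD_ATMOSPHERE_MBAR) -> float:
    """Absolute pressure = gauge pressure + current ambient pressure."""
    return gauge_mbar + ambient_mbar


def absolute_to_gauge(absolute_mbar: float,
                      ambient_mbar: float = STANDARD_ATMOSPHERE_MBAR) -> float:
    """Gauge pressure = absolute pressure - current ambient pressure."""
    return absolute_mbar - ambient_mbar


if __name__ == "__main__":
    # The same absolute pressure gives a different gauge reading
    # whenever the ambient pressure changes.
    for ambient in (983.25, 1013.25, 1043.25):
        reading = absolute_to_gauge(2000.0, ambient)
        print(f"ambient {ambient:.2f} mbar -> gauge reading {reading:.2f} mbar")
```

The loop illustrates the point made above: the absolute value stays fixed, while the gauge reading shifts with the ambient pressure.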

In practice, the difference between these two measurements is as follows:

In most cases, the measuring task is to determine the gauge pressure. This is why this type of sensor is most widely used. However, if a gauge pressure sensor is used in an application in which the actual measuring task is to measure the absolute pressure, the following additional errors must be expected:

- ±30 mbar caused by changes in the weather
- up to 200 mbar caused by a change of location (e.g. from sea level to 2,000 m)

Depending on the measuring range, these errors can be substantial (e.g. in pneumatics at a measuring range of 1 bar) or negligible (in hydraulics at 400 bar).
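To see why the same error is substantial in one case and negligible in the other, it helps to express it as a percentage of the measuring range. The sketch below uses the error figures and measuring ranges quoted above; the function and constant names are hypothetical and chosen only for this illustration.

```python
# Error figures taken from the text above, in mbar.
WEATHER_ERROR_MBAR = 30.0
ALTITUDE_ERROR_MBAR = 200.0


def error_percent_of_range(error_mbar: float, range_bar: float) -> float:
    """Return an error given in mbar as a percentage of a range given in bar."""
    range_mbar = range_bar * 1000.0  # 1 bar = 1000 mbar
    return 100.0 * error_mbar / range_mbar


if __name__ == "__main__":
    # Measuring ranges from the text: 1 bar (pneumatics), 400 bar (hydraulics).
    for label, range_bar in (("pneumatics", 1.0), ("hydraulics", 400.0)):
        weather = error_percent_of_range(WEATHER_ERROR_MBAR, range_bar)
        altitude = error_percent_of_range(ALTITUDE_ERROR_MBAR, range_bar)
        print(f"{label} ({range_bar:g} bar): "
              f"weather {weather:.4f} %, altitude {altitude:.4f} %")
```

At a range of 1 bar the two errors amount to 3 % and 20 % of the range; at 400 bar they shrink to 0.0075 % and 0.05 %.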

If you are uncertain whether your measuring task requires an absolute pressure or gauge/relative pressure measurement, your contact person will be glad to assist you.